Contents:

Objective and Risk Analysis Pipeline

Risk Analysis Pipeline Details

Consolidated Important Risk Factors: o1-preview Model

Consolidated Important Risk Factors: o1-mini Model

Apply a Risk Mitigation Strategy Framework: Example using COSO ERM Framework

Get Started

OpenAI’s o1 model model series, currently including o1-preview and o1-mini, are cutting-edge reasoning engines. Unlike standard language models, they're optimized to handle complex, multi-step challenges that go beyond simple text generation, offering enhanced capabilities for problem-solving and logical reasoning. Additionally, these models include built-in mechanisms ideal for risk analysis, helping to ensure more reliable and safe outputs in critical decision-making tasks.

The o1 model series achieves this by optimizing the reasoning approach. Similarly to management consulting companies, these models break a problem down into smaller parts and "think" over a detailed approach. To come up with an optimized plan, these models can spend quite a bit of time achieving both an optimal approach and optimal outcome. Because these models can take many seconds (sometimes up to 35+ seconds) to return a reasoning answer, it does not make them ideal for real-time Generative AI scenarios like chat interfaces. However, these specific o1 models are ideal for business process workflows & pipelines where the better approach and outcome are sufficiently important.

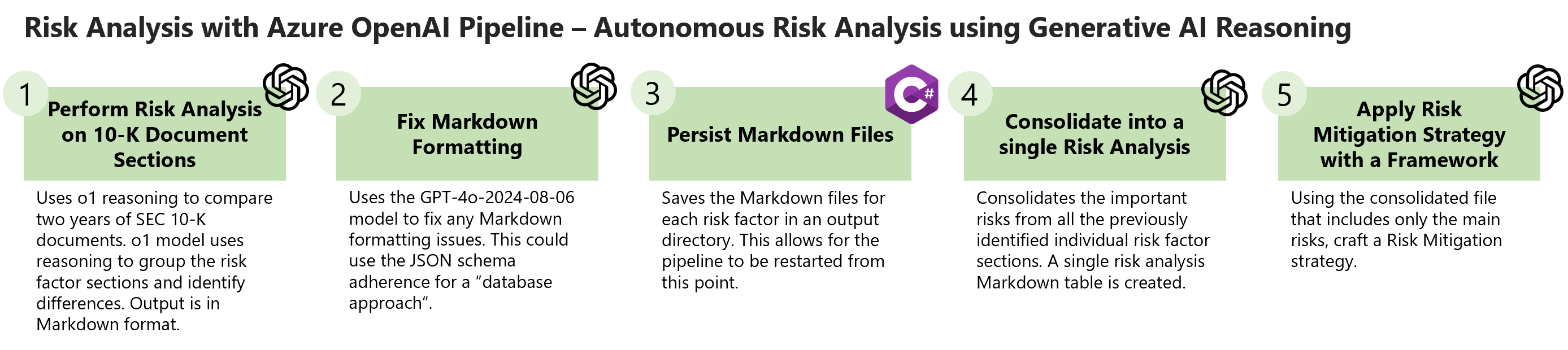

This repository includes a C# Console application that will orchestrate the following Risk Analysis Pipeline below:

The pipeline in the repository performs Risk Analysis over SEC 10-K documents. In simple terms, the 10-K is a big report that gives investors a clear picture of how well a public company is doing. These reports must be filed with the Securities and Exchange Commission (SEC) annually. Companies also have to disclose any potential risks they face in the 10-K, such as economic challenges, competition, legal issues, or changes in regulations. This helps investors understand what could go wrong with the company and how those risks might affect its future performance. These reports are typically analyzed by: investors, journalists, regulators, credit rating agencies, competitors etc. This makes a 10-K report ideal to validate if Generative AI advanced reasoning can be applied to risk analysis.

- The following two Microsoft SEC 10-k Documents are used from 2023 and 2024. Links below illustrate the Risk Factors areas:

The image below illustrates how each Risk Factor section in the SEC 10-K documents is compared, analyzed by Generative AI. The identified differences are materialized as Markdown table files. This allows for experts to analyze the intermediate step and the workflow to begin from this state; bypassing the initial processing.

Navigate to the Risk Factor Analysis Sections: https://github.com/bartczernicki/RiskAnalysisWithOpenAIReasoning/tree/master/RiskAnalysisWithOpenAIReasoning/Output/o1-preview

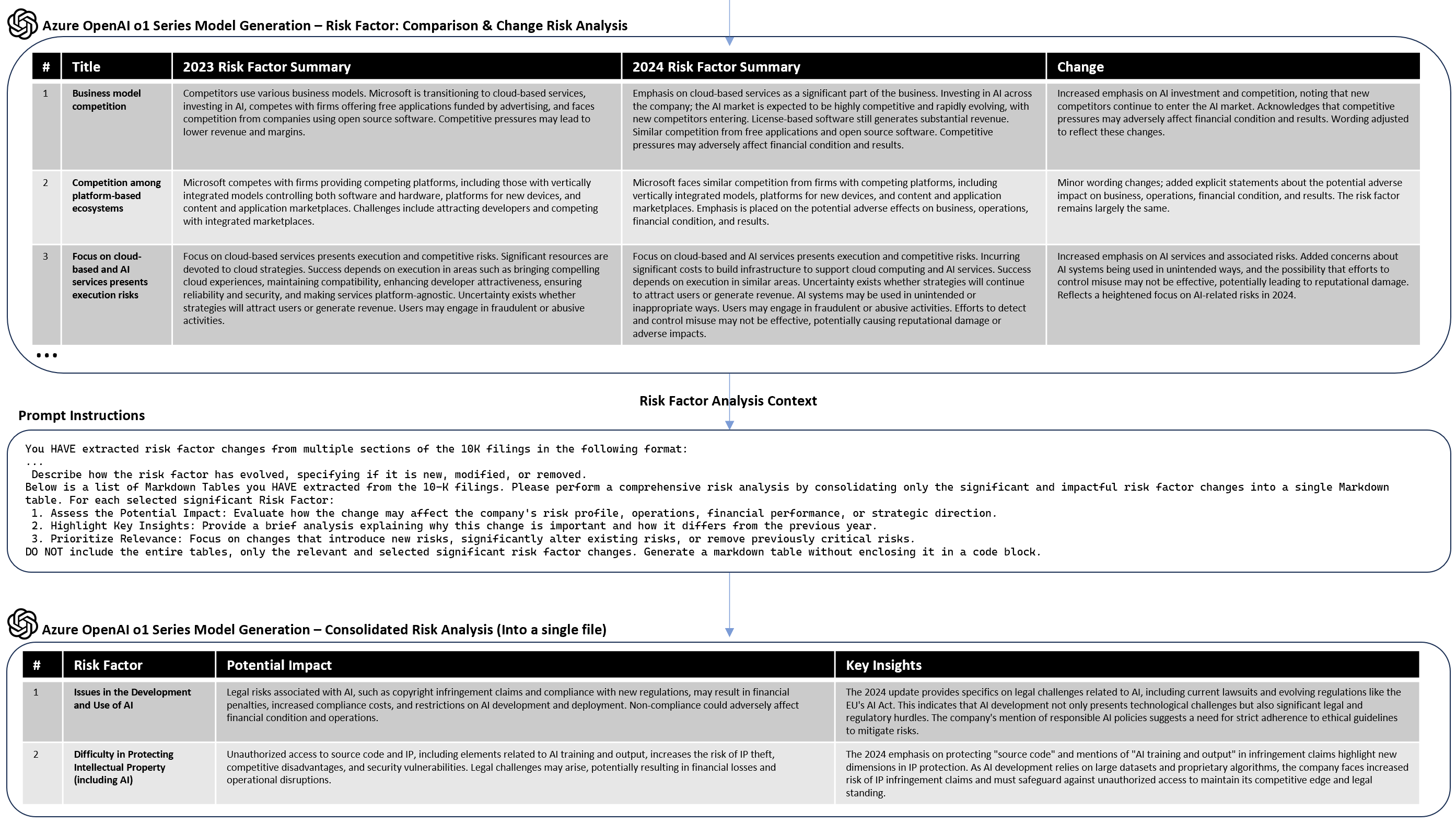

Notice that each section contains several groups and certain risk factors do not change much (minor wording). An optimization would be to surface only the sufficiently important risk factor changes and persist the minor changes as intermediate steps. Therefore, in the next pipeline step, all of the generated 10-K Risk Factor sections are then consolidated into a single analysis that only surfaces the relevant risks into a single file. Examples of the single consolidated risk analysis are shown below for each respective o1 series model.

Note

Currently this application has "hard-coded" the SEC 10-K documents in a Data object. This was done to simplify and focus on the Generative AI risk analysis. There are many solutions like Azure Document Intelligence, that can provide the document segmantation to extract the Risk Factor sections dynamically.

Full analysis details: https://github.com/bartczernicki/RiskAnalysisWithOpenAIReasoning/tree/master/RiskAnalysisWithOpenAIReasoning/Output/o1-preview

Below is a consolidated analysis of the significant and impactful risk factor changes between the 2023 and 2024 10-K filings.

| No. | Risk Factor | Potential Impact | Key Insights |

|---|---|---|---|

| 1 | Security of our Information Technology | The disclosure of actual cyber incidents, including a specific nation-state attack, indicates an increased risk to the company's IT systems. This can lead to further unauthorized access, potential data breaches, harm to reputation, legal or regulatory penalties, and increased costs for cybersecurity measures. | The 2024 emphasis on actual cyber incidents, such as the November 2023 attack, highlights that threats have materialized. This change reflects a shift from potential risks to realized risks, underscoring the urgency to strengthen cybersecurity defenses and address vulnerabilities. The mention of unauthorized access to customer data also heightens the potential for legal and regulatory consequences. |

| 2 | Security of our Products, Services, and Customers' Data | Cyberattacks on IT systems affecting customers can erode customer trust, damage relationships, and result in financial losses. There are potential legal liabilities if customer data is compromised. Also, sophisticated adversaries exploiting AI features might introduce unanticipated security threats. | The 2024 update emphasizes that attacks have impacted customers, not just the company, illustrating a broader scope of risk. Referencing the nation-state attack demonstrates the severity of threats faced. The acknowledgment of AI-related security concerns indicates that as the company integrates AI into products, it must proactively address new vulnerabilities that sophisticated adversaries might exploit. |

| 3 | Disclosure and Misuse of Personal Data | Increased risk of insider threats may result in unauthorized disclosure or misuse of customer and user data, leading to reputational damage, legal liabilities, and financial losses. Failure to mitigate insider threats can adversely affect business operations and financial performance. | The 2024 addition of insider threats highlights internal vulnerabilities in data security practices. This shift signifies that threats are not only external but also internal. Addressing insider threats requires additional controls, employee training, and monitoring, which may increase operational costs but are necessary to protect personal data and comply with regulations. |

| 4 | Risks from Products, Services, and Third-Party Interactions | Broadening the risk factor to all products and services increases the complexity of managing security, privacy, and execution risks. Inappropriate use or defects in products can lead to legal claims, regulatory actions, and harm to individuals or businesses, negatively impacting financial results and operations. | By expanding the scope beyond IoT to include all products, services, and third-party interactions, the company acknowledges a wider range of potential risks. This change indicates a need for comprehensive risk management strategies covering various use cases, especially as products are integrated into high-risk scenarios or combined with third-party solutions. It underscores the importance of quality control and reliability across all offerings. |

| 5 | Issues in the Development and Use of AI | Legal risks associated with AI, such as copyright infringement claims and compliance with new regulations, may result in financial penalties, increased compliance costs, and restrictions on AI development and deployment. Non-compliance could adversely affect financial condition and operations. | The 2024 update provides specifics on legal challenges related to AI, including current lawsuits and evolving regulations like the EU's AI Act. This indicates that AI development not only presents technological challenges but also significant legal and regulatory hurdles. The company's mention of responsible AI policies suggests a need for strict adherence to ethical guidelines to mitigate risks. |

| 6 | Damage to Reputation Related to AI and Cybersecurity Incidents | Negative perceptions stemming from AI development and deployment, responsible AI failures, and cybersecurity incidents can harm the company's global reputation and brands. This may lead to loss of customer trust, decreased sales, and challenges in talent acquisition and retention. | The inclusion of "development and deployment of AI" and "cybersecurity incidents" as potential reputation-damaging factors in 2024 reflects heightened public and stakeholder scrutiny. It underscores the importance of responsible AI practices and robust cybersecurity measures to maintain the company's reputation and competitive position in the market. |

| 7 | Difficulty in Protecting Intellectual Property (including AI) | Unauthorized access to source code and IP, including elements related to AI training and output, increases the risk of IP theft, competitive disadvantages, and security vulnerabilities. Legal challenges may arise, potentially resulting in financial losses and operational disruptions. | The 2024 emphasis on protecting "source code" and mentions of "AI training and output" in infringement claims highlight new dimensions in IP protection. As AI development relies on large datasets and proprietary algorithms, the company faces increased risk of IP infringement claims and must safeguard against unauthorized access to maintain its competitive edge and legal standing. |

| 8 | Government Litigation and Regulatory Activity (EU Digital Markets Act) | Compliance with new market regulations like the EU Digital Markets Act imposes additional obligations on the company's core platform services. This may result in increased compliance costs, operational changes, and limitations on how products are designed and marketed, potentially affecting revenue and profitability. | The 2024 update specifically mentions the EU Digital Markets Act and its designation of Windows and LinkedIn as core platform services subject to new obligations. This indicates significant regulatory changes that could impact the company's operations in the EU, necessitating adjustments in business practices and possibly affecting market competitiveness. |

| 9 | Evolving Sustainability Regulatory Requirements | Failure to comply with new environmental, social, and governance (ESG) laws and regulations, or to achieve sustainability goals, could result in increased costs, legal penalties, and reputational damage. Non-compliance may adversely affect financial performance and investor confidence. | The introduction of this new risk factor in 2024 reflects growing global emphasis on sustainability and ESG considerations. The company's acknowledgment of these risks signals the need to align operations with evolving regulatory requirements and stakeholder expectations to mitigate potential negative impacts on its business and reputation. |

| 10 | Inadequate Operations Infrastructure (AI and Water Supply) | Insufficient infrastructure to support AI features and reliance on critical resources like water may lead to service outages, reduced performance, and inability to meet customer demands. This can result in lost revenue, increased costs, and adverse effects on business operations and financial results. | The 2024 addition of AI demands and water supply issues highlights new challenges in scaling infrastructure. As AI services require substantial computational power and cooling, resource limitations could impede service delivery. Addressing these challenges is crucial to ensure reliability and meet the growing demand for AI-integrated products and services. |

| 11 | Investments in AI Products May Not Yield Expected Returns | Significant investments in AI may not result in profitable returns, expected operating margins, or customer adoption. This could adversely affect the company's financial condition, results of operations, and strategic objectives. | The 2024 focus on AI investments reflects the strategic shift towards integrating AI across products and services. However, the high costs associated with AI development and uncertain market acceptance pose risks to achieving desired financial outcomes. This underscores the need for careful investment and market analysis to optimize returns. |

| 12 | Acquisitions Facing Legal Challenges Post-Completion | Legal challenges to acquisitions, even after completion, may result in altered terms, unwinding of transactions, or operational disruptions. This can lead to additional costs, integration difficulties, and adverse impacts on business operations and financial results. | The 2024 mention of ongoing FTC challenges to completed acquisitions, like Activision Blizzard, indicates that regulatory scrutiny can continue post-acquisition. This increases the risk of strategic uncertainties and potential financial liabilities, highlighting the importance of thorough regulatory compliance and risk assessment during the acquisition process. |

Full analysis details: https://github.com/bartczernicki/RiskAnalysisWithOpenAIReasoning/tree/master/RiskAnalysisWithOpenAIReasoning/Output/o1-mini

Below is a consolidated analysis of the significant and impactful risk factor changes between the 2023 and 2024 10-K filings.

| Title | Change | Potential Impact | Key Insights |

|---|---|---|---|

| Cyberattacks and Security Vulnerabilities | Modified: Added specific incident details and increased focus on legal and regulatory risks. | Increased legal and regulatory scrutiny may lead to higher compliance costs, potential fines, and more stringent requirements for cybersecurity measures. This can affect the company's financial performance and reputation. | Highlighting a nation-state threat incident underscores the evolving nature of cyber threats and the heightened legal implications, emphasizing the need for robust security frameworks and proactive risk management. |

| Protection and Utilization of Intellectual Property | Modified: Included protection of source code as part of IP challenges. | Enhanced risks around IP protection, especially source code, could lead to increased vulnerability to theft, reverse engineering, and competitive disadvantages, potentially affecting revenue and innovation capabilities. | Emphasizing source code protection reflects the critical importance of safeguarding core software assets, ensuring competitive advantage, and maintaining customer trust in the company's technology solutions. |

| Sustainability Regulations | New: Introduction of sustainability regulatory requirements and related risks. | Compliance with new sustainability regulations may incur significant costs, require operational changes, and impact financial performance. Failure to meet sustainability goals could result in legal penalties and reputational damage. | The addition of sustainability risks highlights the growing importance of ESG factors, aligning with global trends towards environmental responsibility and potentially influencing investor and consumer perceptions. |

| Data Privacy and Personal Data Regulations | Modified: Included additional references to the EU AI Act and other specific digital regulations. | Stricter data privacy laws and AI-specific regulations can increase compliance costs, limit data usage, and restrict product functionalities, potentially reducing competitiveness and innovation while increasing the risk of hefty fines for non-compliance. | Incorporating AI-related data privacy regulations reflects the intersection of AI advancements with data protection, necessitating comprehensive compliance strategies to navigate complex regulatory landscapes and safeguard user data. |

| Competition Laws and Enforcement | Modified: Added specifics regarding the EU Digital Markets Act and designated core platform services like LinkedIn. | Enhanced regulatory obligations under the EU Digital Markets Act could restrict business practices, limit market strategies, and lead to fines or operational changes, affecting growth and market positioning. | Detailing specific regulations such as the EU Digital Markets Act provides clarity on the regulatory challenges faced, emphasizing the need for strategic adjustments to comply with new legal standards and maintain market access. |

| Claims and Lawsuits | Modified: Expanded to include AI services and additional examples of potential claims. | Increased exposure to AI-related legal claims can result in higher legal costs, potential damages, and operational restrictions, impacting financial stability and strategic initiatives in AI development. | Addressing AI-specific legal risks underscores the importance of developing responsible AI systems and proactively managing potential legal challenges associated with AI deployments and innovations. |

| Legal and Regulatory Requirements | Modified: Included specific references to AI Act and content moderation regulations. | Compliance with AI and content moderation laws may require significant operational adjustments, increased expenditures on regulatory compliance, and could limit certain business activities, affecting overall business agility and profitability. | Highlighting AI and content moderation regulations emphasizes the critical need for governance frameworks that ensure compliance while fostering innovation, balancing regulatory adherence with business growth objectives. |

| Data Insights and Regulatory Constraints | Modified: Added specific mentions of AI services and reinforced the connection between data insights and regulatory constraints. | Regulatory constraints on data usage for AI services can limit data-driven innovation, increase compliance costs, and restrict monetization opportunities, potentially affecting the company's competitive edge and revenue streams. | Linking data insights with AI service regulations highlights the dependency of AI advancements on data availability and usage rights, stressing the need for strategic data management and compliance to leverage AI effectively without regulatory hindrances. |

OpenAI o1 reasoning models can not only can craft a multi-step risk analysis, but can also apply a risk mitigation strategy framework to draft a strategic approach. Below is a sample output using the OpenAI o1 series to apply the COSO ERM Framework on the consolidated risk analysis from the Microsoft SEC 10-K Documents:

Applying the COSO ERM Framework to Mitigate Identified Risks

Introduction

The Committee of Sponsoring Organizations of the Treadway Commission (COSO) Enterprise Risk Management (ERM) framework provides a comprehensive approach to identifying, assessing, and managing risks within an organization. By applying this framework to the consolidated risk analysis, Microsoft can develop effective strategies to mitigate potential adverse effects on its operations, financial performance, and strategic objectives.

The COSO ERM framework encompasses five interrelated components:

- Governance and Culture

- Strategy and Objective-Setting

- Performance

- Review and Revision

- Information, Communication, and Reporting

Below are recommended risk mitigation strategies for each identified risk, aligned with the COSO ERM framework components.

Risk: Increased cyber threats, including nation-state attacks, leading to unauthorized access, data breaches, reputational harm, legal penalties, and higher cybersecurity costs.

Governance and Culture

- Strengthen Cybersecurity Governance: Establish a dedicated cybersecurity oversight committee at the board level to ensure cybersecurity risks are prioritized.

- Promote a Security-First Culture: Foster an organizational culture where cybersecurity is everyone's responsibility through regular training and awareness programs.

Strategy and Objective-Setting

- Align Cybersecurity with Business Strategy: Integrate cybersecurity considerations into strategic planning processes, ensuring alignment with organizational objectives.

- Define Risk Appetite: Clearly articulate the acceptable level of cybersecurity risk and ensure it guides decision-making.

Performance

- Enhance Cybersecurity Infrastructure: Invest in advanced security technologies such as AI-driven threat detection and zero-trust architectures.

- Conduct Regular Assessments: Perform frequent vulnerability assessments and penetration testing to identify and remediate security gaps.

- Third-Party Risk Management: Implement rigorous vendor risk assessments and require compliance with security standards.

Review and Revision

- Continuous Improvement: Regularly update cybersecurity policies and procedures to address evolving threats.

- Incident Response Planning: Develop and test robust incident response plans to ensure rapid and effective action during breaches.

Information, Communication, and Reporting

- Transparent Reporting: Communicate cybersecurity policies and incidents to stakeholders as appropriate.

- Employee Engagement: Encourage reporting of suspicious activities and foster open communication about security concerns.

Risk: Cyberattacks affecting customers, erosion of trust, potential legal liabilities, and exploitation of AI features by adversaries.

Governance and Culture

- Implement Responsible AI Governance: Establish ethical guidelines and oversight for AI development and deployment.

- Customer Data Protection Policies: Reinforce policies that prioritize the security and privacy of customer data.

Strategy and Objective-Setting

- Secure Development Lifecycle: Integrate security and privacy controls throughout the product development process.

- Risk Assessment of AI Features: Evaluate AI functionalities for potential security vulnerabilities and misuse.

Performance

- Enhance Product Security: Employ advanced encryption, access controls, and anomaly detection in products and services.

- Monitor AI Systems: Use continuous monitoring to detect and mitigate AI system exploitation.

Review and Revision

- Product Security Reviews: Regularly assess products for security risks and update them accordingly.

- Adapt to Emerging Threats: Stay abreast of new attack vectors, especially those targeting AI systems.

Information, Communication, and Reporting

- Customer Communication: Provide clear information on security features and protocols to customers.

- Collaborate with Security Communities: Engage with industry groups to share intelligence and best practices.

Risk: Insider threats leading to unauthorized data disclosure, reputational damage, legal liabilities, and financial losses.

Governance and Culture

- Establish an Insider Threat Program: Develop policies and procedures to detect, deter, and respond to insider risks.

- Foster Ethical Standards: Promote a culture of integrity and trust, emphasizing the importance of data protection.

Strategy and Objective-Setting

- Implement Least Privilege Access: Restrict data access to only those employees who require it for their roles.

- Deploy Data Loss Prevention (DLP) Tools: Utilize DLP technologies to monitor and control the movement of sensitive data.

Performance

- Behavioral Monitoring: Use analytics to detect unusual employee activities that may indicate insider threats.

- Regular Training: Educate employees on data handling, privacy laws, and the consequences of data misuse.

Review and Revision

- Policy Updates: Periodically review and update insider threat policies to reflect changing risks.

- Incident Analysis: Learn from past incidents to improve controls and prevent recurrence.

Information, Communication, and Reporting

- Anonymous Reporting Channels: Provide secure avenues for employees to report suspicious behavior.

- Clear Communication of Expectations: Ensure all staff understand policies regarding data security and privacy.

Risk: Complexity in managing security and privacy across all products and services, leading to potential legal claims and operational impacts.

Governance and Culture

- Comprehensive Risk Management Framework: Expand risk management to cover all products and services, including third-party interactions.

- Vendor Management Policies: Develop stringent criteria for selecting and monitoring third-party partners.

Strategy and Objective-Setting

- Integrate Risk Assessments: Incorporate security and privacy risk assessments into product development and service delivery processes.

- Set Quality Standards: Define and enforce quality benchmarks for all offerings.

Performance

- Rigorous Testing: Conduct extensive testing, including security, functionality, and interoperability tests before deployments.

- Third-Party Audits: Require third-party providers to undergo regular security audits and comply with company standards.

Review and Revision

- Feedback Loops: Gather customer and stakeholder feedback to identify areas for improvement.

- Adapt to New Risks: Update risk management strategies in response to technological changes and emerging threats.

Information, Communication, and Reporting

- Transparency with Stakeholders: Maintain open communication about product risks and mitigation efforts.

- Incident Disclosure Policies: Establish protocols for timely disclosure of product defects or security issues.

Risk: Legal challenges such as copyright infringement and compliance with evolving AI regulations, resulting in financial and operational impacts.

Governance and Culture

- Establish AI Ethics Committee: Create a dedicated body to oversee AI development, ensuring compliance with laws and ethical standards.

- Promote Legal Awareness: Educate teams on legal considerations related to AI, including intellectual property rights.

Strategy and Objective-Setting

- Regulatory Compliance Strategy: Develop plans to comply with current and anticipated AI regulations globally.

- Risk Assessments for AI Projects: Evaluate potential legal risks before initiating AI projects.

Performance

- Ethical AI Development Practices: Implement protocols to ensure AI models are trained on legally obtained data and respect IP rights.

- Monitor Legal Developments: Stay informed about legal cases and regulatory changes affecting AI.

Review and Revision

- Policy Revisions: Update AI development policies to reflect new legal requirements and industry best practices.

- Audit AI Systems: Periodically review AI systems for compliance and ethical considerations.

Information, Communication, and Reporting

- Engage with Regulators: Participate in dialogues with regulatory bodies to influence and prepare for regulatory changes.

- Stakeholder Communication: Keep stakeholders informed about AI initiatives and compliance efforts.

Risk: Negative perceptions from AI deployments and cybersecurity incidents harming reputation, customer trust, and talent acquisition.

Governance and Culture

- Brand Protection Strategy: Develop a proactive approach to manage reputation risks, including monitoring and response plans.

- Emphasize Responsible Practices: Integrate responsible AI and cybersecurity practices into the company's core values.

Strategy and Objective-Setting

- Prioritize Trust-Building: Set objectives that focus on building and maintaining trust with customers and the public.

- Crisis Management Planning: Prepare for potential incidents with established communication and remediation plans.

Performance

- Deliver High-Quality Products: Ensure products and services meet high standards for security and reliability to prevent incidents.

- Public Relations Efforts: Engage in positive storytelling and transparency to enhance brand image.

Review and Revision

- Analyze Incidents for Improvement: Use past events as learning opportunities to strengthen defenses and response strategies.

- Adapt to Public Concerns: Stay attuned to societal expectations and adjust practices accordingly.

Information, Communication, and Reporting

- Open Communication: Be transparent during incidents, providing timely and accurate information to stakeholders.

- Stakeholder Engagement: Foster relationships with customers, investors, and communities to build goodwill.

Risk: Unauthorized access and infringement of IP and source code, including AI-related elements, leading to competitive disadvantages and legal challenges.

Governance and Culture

- IP Protection Policies: Enforce strict policies on handling and sharing proprietary information.

- Employee Training on IP Security: Educate staff on the importance of IP protection and their role in safeguarding company assets.

Strategy and Objective-Setting

- Implement Access Controls: Restrict access to source code and sensitive materials to authorized personnel only.

- Legal Enforcement Plans: Prepare to take legal action against IP infringements to deter potential violators.

Performance

- Secure Development Environments: Use secure coding practices and environments to prevent unauthorized access.

- Monitor for IP Breaches: Deploy tools to detect potential IP theft or unauthorized disclosures.

Review and Revision

- Regular Security Audits: Assess IP protection measures periodically and address identified weaknesses.

- Update Agreements: Ensure contracts with employees and partners include robust IP protection clauses.

Information, Communication, and Reporting

- Incident Reporting Mechanisms: Encourage reporting of suspected IP breaches.

- Compliance Reporting: Adhere to reporting requirements related to IP protection.

Risk: New regulations imposing obligations and operational changes, affecting revenue and profitability.

Governance and Culture

- Regulatory Compliance Oversight: Assign dedicated teams to monitor regulatory environments and ensure compliance.

- Culture of Adaptability: Encourage flexibility and responsiveness to regulatory changes across the organization.

Strategy and Objective-Setting

- Align Business Models with Regulations: Adjust business strategies to comply with regulations while seeking to maintain competitive advantages.

- Risk Assessment and Planning: Anticipate regulatory impacts and develop contingency plans.

Performance

- Implement Compliance Programs: Develop processes and controls to meet new regulatory requirements.

- Employee Training: Educate staff on how regulations affect their roles and responsibilities.

Review and Revision

- Monitor Compliance Effectiveness: Regularly evaluate compliance efforts and make improvements.

- Engage in Policy Discussions: Participate in industry dialogues to influence and prepare for regulatory developments.

Information, Communication, and Reporting

- Regulator Communication: Maintain open channels with regulators to ensure understanding and compliance.

- Transparent Disclosures: Inform stakeholders about regulatory impacts and response strategies.

Risk: Failure to meet ESG laws and sustainability goals, leading to increased costs, penalties, and reputational damage.

Governance and Culture

- Sustainability Leadership: Appoint senior leaders responsible for ESG initiatives and compliance.

- Embed Sustainability in Culture: Promote sustainable practices and values among all employees.

Strategy and Objective-Setting

- Set Clear ESG Goals: Define measurable sustainability targets aligned with regulations and stakeholder expectations.

- Integrate ESG into Strategy: Incorporate ESG considerations into business planning and decision-making.

Performance

- Implement Sustainable Practices: Adopt operational changes to reduce environmental impact and enhance social governance.

- Measure and Report Progress: Use key performance indicators (KPIs) to track ESG performance.

Review and Revision

- Regular ESG Assessments: Periodically evaluate sustainability efforts and adjust strategies accordingly.

- Benchmarking: Compare performance against industry standards and best practices.

Information, Communication, and Reporting

- Transparent ESG Reporting: Provide comprehensive disclosures on ESG activities and progress.

- Stakeholder Engagement: Involve stakeholders in sustainability initiatives and gather feedback.

Risk: Insufficient infrastructure and reliance on critical resources like water, leading to service disruptions and inability to meet demand.

Governance and Culture

- Infrastructure Oversight: Establish committees to oversee infrastructure development and resource management.

- Resource Consciousness: Promote awareness of resource consumption and environmental impacts.

Strategy and Objective-Setting

- Long-Term Infrastructure Planning: Develop strategic plans to expand and enhance infrastructure supporting AI capabilities.

- Resource Diversification: Explore alternative technologies and resources to mitigate dependency risks.

Performance

- Invest in Sustainable Infrastructure: Allocate resources to build efficient and resilient infrastructure, incorporating green technologies.

- Optimize Resource Usage: Implement practices to reduce water and energy consumption in operations.

Review and Revision

- Assess Infrastructure Needs: Regularly evaluate infrastructure requirements in line with technological advancements and demand forecasts.

- Update Contingency Plans: Prepare for resource shortages or outages with backup systems and suppliers.

Information, Communication, and Reporting

- Communicate Infrastructure Initiatives: Keep stakeholders informed about efforts to strengthen infrastructure and resource management.

- Compliance with Environmental Reporting: Adhere to regulations regarding resource usage disclosures.

Risk: Significant investments in AI not delivering profitable returns or customer adoption, affecting financial condition and objectives.

Governance and Culture

- Investment Oversight: Create governance structures to evaluate and monitor the performance of AI investments.

- Balance Innovation with Prudence: Encourage innovative projects while maintaining financial discipline.

Strategy and Objective-Setting

- Market Research: Conduct thorough analyses to understand customer needs and market demand for AI products.

- Align with Core Competencies: Focus AI investments on areas where the company has expertise and competitive advantages.

Performance

- Pilot Programs: Test AI products with limited releases to gather feedback and refine offerings.

- Cost Management: Monitor expenses closely and seek efficiencies in AI development processes.

Review and Revision

- Evaluate ROI: Regularly assess the return on investment for AI projects and reallocate resources as needed.

- Adjust Strategies: Be willing to pivot or discontinue investments that are not meeting objectives.

Information, Communication, and Reporting

- Stakeholder Transparency: Provide updates on AI investments and their performance to stakeholders.

- Internal Knowledge Sharing: Share insights and lessons learned across the organization to improve future projects.

Risk: Post-acquisition legal challenges leading to altered terms, unwinding transactions, or operational disruptions.

Governance and Culture

- Strengthen M&A Oversight: Enhance due diligence processes and legal reviews for acquisitions.

- Regulatory Awareness: Cultivate an understanding of antitrust laws and regulatory environments among leadership.

Strategy and Objective-Setting

- Comprehensive Risk Assessments: Evaluate potential legal and regulatory risks before, during, and after acquisitions.

- Develop Contingency Plans: Prepare for possible legal challenges with strategies to mitigate impacts.

Performance

- Ensure Compliance: Adhere strictly to all legal requirements throughout the acquisition process.

- Effective Integration Plans: Plan integrations carefully to minimize disruptions and align operations.

Review and Revision

- Post-Merger Audits: Review acquisitions post-completion to identify and address any regulatory issues.

- Adjust Acquisition Strategies: Modify approaches based on experiences and changing regulatory landscapes.

Information, Communication, and Reporting

- Engage with Regulators: Proactively communicate with regulatory bodies to address concerns.

- Inform Stakeholders: Keep investors and employees informed about acquisition developments and any associated risks.

Conclusion

Applying the COSO ERM framework allows Microsoft to comprehensively address each identified risk by integrating risk management into every aspect of the organization. By strengthening governance, aligning strategies, enhancing performance, continuously reviewing processes, and effectively communicating, Microsoft can mitigate these risks. These efforts not only protect the company's assets and reputation but also support its strategic objectives and long-term success in a dynamic business environment.

- .NET 9.x SDK Installed: https://dotnet.microsoft.com/en-us/download/dotnet/9.0

- Azure OpenAI API Access: (OpenAI Access will work as well) either o1-mini/o1 and GPT-4o models deployed and API key

- Visual Studio 2022(+) if debugging the solution with an IDE

git clone https://github.com/bartczernicki/RiskAnalysisWithOpenAIReasoning.git

Add this to the Secrets.json (Right Click on VS Project -> Manage User Secrets) and run the console application

{

"AzureOpenAI": {

"AzureOpenAIType": "PAYGO",

"o1Endpoint": "https://YOURAZUREOPENAIENDPOINT.openai.azure.com/"

"o1ModelDeploymentName": "o1-preview",

"o1APIKey": "YOURAZUREOPENAIKEY",

"gpt4oEndpoint": "https://YOURAZUREOPENAIENDPOINT.openai.azure.com/"

"gpt4oModelDeploymentName": "gpt-4o-2024-08-06-global",

"gpt4oAPIKey": "YOURAZUREOPENAIKEY"

}

}