Spark Monitor Fork - A fork of SparkMonitor that works with multiple Spark Sessions

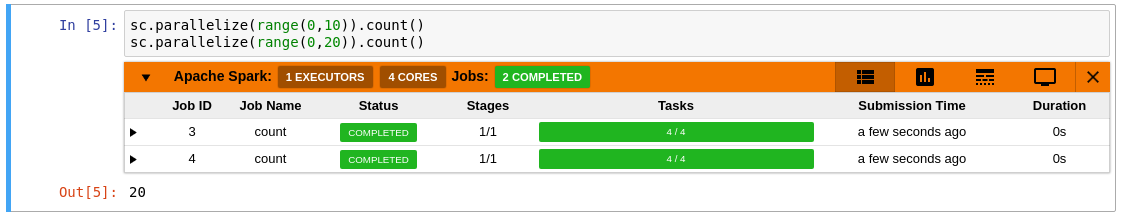

- Automatically displays a live monitoring tool below cells that run Spark jobs in a Jupyter notebook

- A table of jobs and stages with progress bars

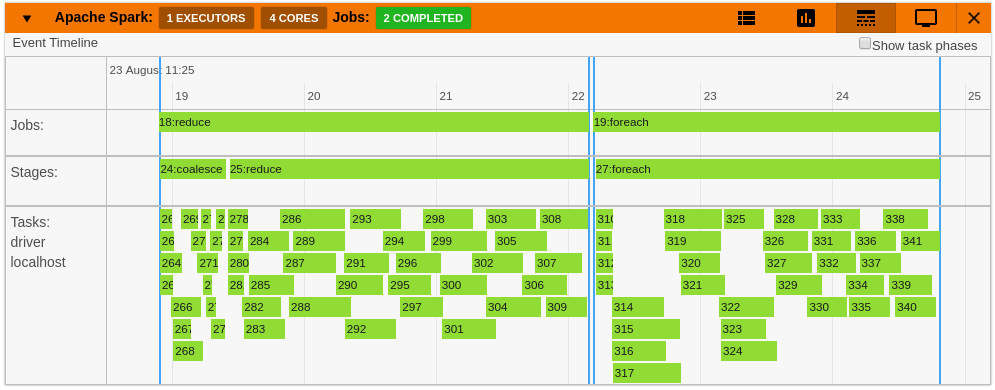

- A timeline which shows jobs, stages, and tasks

- A graph showing the number of active tasks and executor cores over time

- A notebook server extension that proxies the Spark UI and displays it in an iframe popup for more details

- For a detailed list of features, see the use case notebooks

- How it Works

npm version: 5.6.0

yarn version: 1.22.4

sbt version: 1.3.2
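Before building, it can help to confirm that the three tools listed above are installed. The following is a minimal sketch, not part of sparkmonitor: the helper name `missing_tools` is hypothetical, and it only checks that each tool is on the PATH, not that its version matches the ones listed.

```python
import shutil

# Tool names taken from the version list above.
BUILD_TOOLS = ("npm", "yarn", "sbt")


def missing_tools(tools=BUILD_TOOLS):
    """Return the names of tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]
```

Calling `missing_tools()` returns an empty list when all three tools are found; any names it returns need to be installed before running the build steps.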

cd sparkmonitor/extension

#Build Javascript

yarn install # Only need to run the first time

yarn run webpack

#Build SparkListener Scala jar

cd scalalistener/

sbt package

docker build -t sparkmonitor .

docker run -it -p 8888:8888 sparkmonitor

cd sparkmonitor/extension

vi VERSION # bump version number

python setup.py sdist

twine upload --repository-url https://upload.pypi.org/legacy/ dist/*

If the twine upload step fails, run rm -rf dist/*, bump the VERSION number, and rerun the steps above.
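The VERSION bump in the release steps can be scripted instead of edited by hand. The following is a minimal sketch, assuming the VERSION file holds a plain dotted numeric version such as 0.0.1 (an assumption; check the actual file contents first). The helper names `bump_patch` and `bump_version_file` are hypothetical and not part of sparkmonitor.

```python
from pathlib import Path


def bump_patch(version: str) -> str:
    """Return the version with its last numeric component incremented by one."""
    parts = version.strip().split(".")
    parts[-1] = str(int(parts[-1]) + 1)
    return ".".join(parts)


def bump_version_file(path: str = "VERSION") -> str:
    """Rewrite the VERSION file in place and return the new version string."""
    version_file = Path(path)
    new_version = bump_patch(version_file.read_text())
    version_file.write_text(new_version + "\n")
    return new_version
```

Run `bump_version_file()` from the directory that contains VERSION, then repeat the sdist and twine upload steps.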

pip install sparkmonitor-s

jupyter nbextension install sparkmonitor --py --user --symlink

jupyter nbextension enable sparkmonitor --py --user

jupyter serverextension enable --py --user sparkmonitor

ipython profile create && echo "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')" >> $(ipython profile locate default)/ipython_kernel_config.py

For more detailed instructions, click here.
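Because the echo command appends to ipython_kernel_config.py every time it runs, repeating the install can leave duplicate lines in that file. The following is a minimal sketch of an idempotent alternative. The function name `enable_kernel_extension` is hypothetical and not part of sparkmonitor, and the config path must be passed in explicitly (for example, the directory reported by `ipython profile locate default` followed by /ipython_kernel_config.py).

```python
from pathlib import Path

# The same line that the echo command in the install steps appends.
EXTENSION_LINE = "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')"


def enable_kernel_extension(config_path: str) -> bool:
    """Append the kernel extension line unless it is already present.

    Returns True if the file was modified, False if the line already existed.
    """
    config = Path(config_path)
    existing = config.read_text() if config.exists() else ""
    if any(line.strip() == EXTENSION_LINE for line in existing.splitlines()):
        return False
    # Start on a fresh line if the file does not end with a newline.
    prefix = "" if existing == "" or existing.endswith("\n") else "\n"
    with config.open("a") as handle:
        handle.write(prefix + EXTENSION_LINE + "\n")
    return True
```

Calling the function a second time on the same file leaves it unchanged and returns False.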

At CERN, the SparkMonitor extension would find two main use cases:

- Distributed analysis with ROOT and Apache Spark using the DistROOT module. Here is an example demonstrating this use case.

- Integration with SWAN, a service for web-based analysis, via a modified container image for SWAN user sessions.